- Jan 09, 2026

The Voice Will Be the Interface of the Future: OpenAI Opens New Horizons in Audio Technology

- Jan 02, 2026

- Technology

Staff Report: PNN

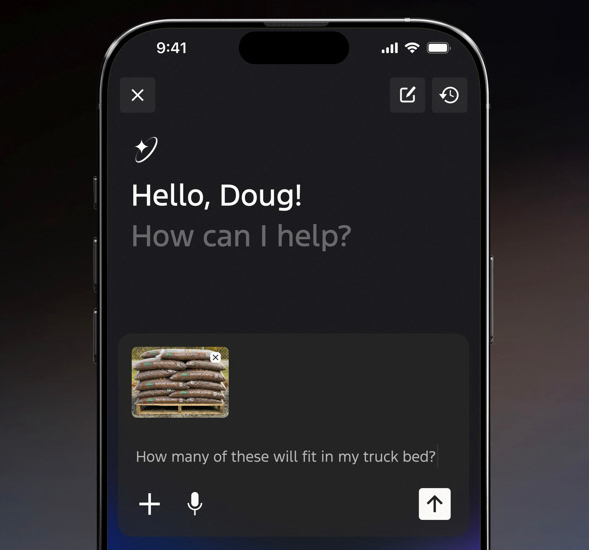

OpenAI is betting big on audio technology as the next step in artificial intelligence. The goal is not just to make ChatGPT’s voice sound more natural, but to prepare a fully audio-driven personal device. According to a recent report, OpenAI has spent the past two months consolidating multiple engineering, product, and research teams with the primary goal of developing next-generation audio models. As part of this initiative, the company plans to bring an audio-first device to market within about a year.

This move reflects the broader direction of the technology industry. Screens are gradually becoming less central, and audio is emerging as the primary interface. Already, over one-third of households in the United States use smart speakers. Meta recently added a feature to its Ray-Ban smart glasses that uses multiple microphones to help wearers hear conversations clearly even in noisy environments. Meanwhile, Google is experimenting with converting search results into conversational audio summaries, and Tesla is integrating xAI’s chatbot “Grok” into its vehicles, enabling voice control for tasks ranging from navigation to temperature adjustments.

It’s not just the major tech companies that have joined this race; new startups are entering as well. However, not all attempts have gone smoothly. The screenless Humane AI Pin became an example of failure despite enormous investment. Similarly, a pendant called Friend AI, which claims to record conversations constantly for companionship, has raised concerns about privacy and personal freedom. Work is also underway on an AI ring, expected to reach the market around 2026, that would let wearers literally talk to their hands.

Though the devices may differ, the underlying concept is clear: audio will be the dominant medium of control in the future. Homes, cars, and even the human body are gradually becoming interfaces.

OpenAI’s new audio model, expected in early 2026, is reported to speak with a more human-like voice, handle interruptions during conversations, and respond while the user is still speaking. Current models are limited in these respects, so this would represent a significant advancement. OpenAI is also considering a family of devices, including smart glasses or screenless smart speakers, which would behave not just as tools but as companions.

Analysts believe that the vision behind this initiative is influenced by former Apple design chief Jony Ive, now part of OpenAI’s hardware efforts. He has long advocated for reducing device dependency and sees audio-first design as an opportunity to correct past technological mistakes.

All in all, OpenAI’s move signals that future AI will not just be seen—it will be heard, and listening may become the primary experience of interacting with technology.